Ollama Installation

Ollama Installation 관련

Ollama is an open-sourced tool you can use to run and manage LLMs on your computer. Once installed, you can access various LLMs as per your needs. You will be using llama3.2:3b-instruct-q4_K_M model to build this chatbot.

A quantized model is a version of a machine learning model that has been optimized to use less memory and computational power by reducing the precision of the numbers it uses. This enables you to use an LLM locally, especially when you don’t have access to a GPU (Graphics Processing Unit - a specialized processor that perform complex computations).

To start, you can download and install the Ollama software here.

Then you can confirm installation by running this command:

ollama --version

Run the following command to start Ollama:

ollama serve

Next, run the following command to pull the Q4_K_M quantization of llama3.2:3b-instruct:

ollama pull llama3.2:3b-instruct-q4_K_M

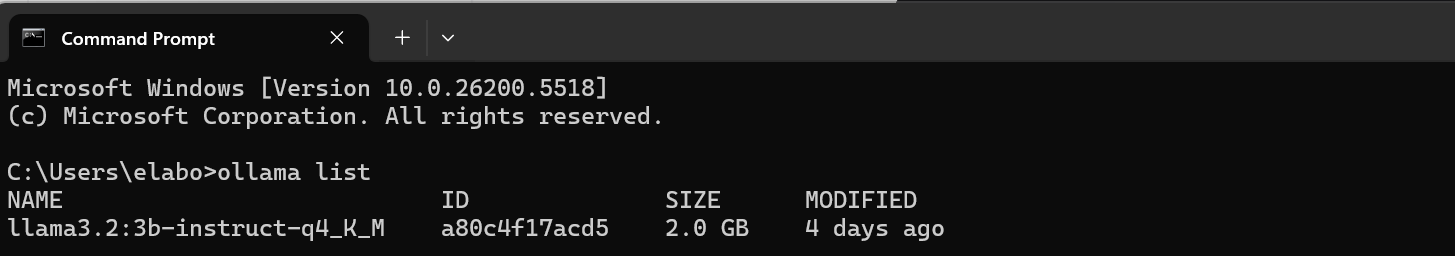

Then confirm that the model was extracted with this:

ollama list

If the model extraction was successful, a list containing the model’s name, ID, and size will be returned, like so:

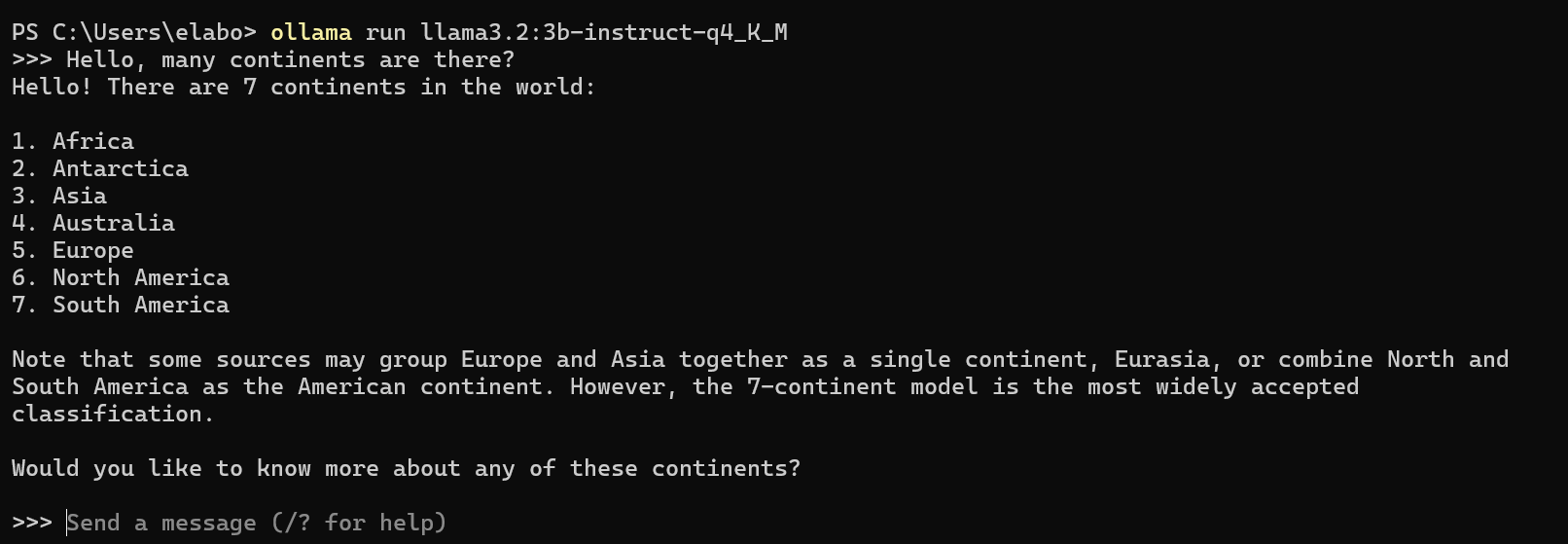

Now you can chat with the model:

ollama run llama3.2:3b-instruct-q4_K_M

If successful, you should receive a prompt that you can test by asking a question and getting an answer. For example:

Then you can exit the console by typing /bye or Ctrl+D