Build a Stable Diffusion VAE From Scratch using Pytorch

Build a Stable Diffusion VAE From Scratch using Pytorch 관련

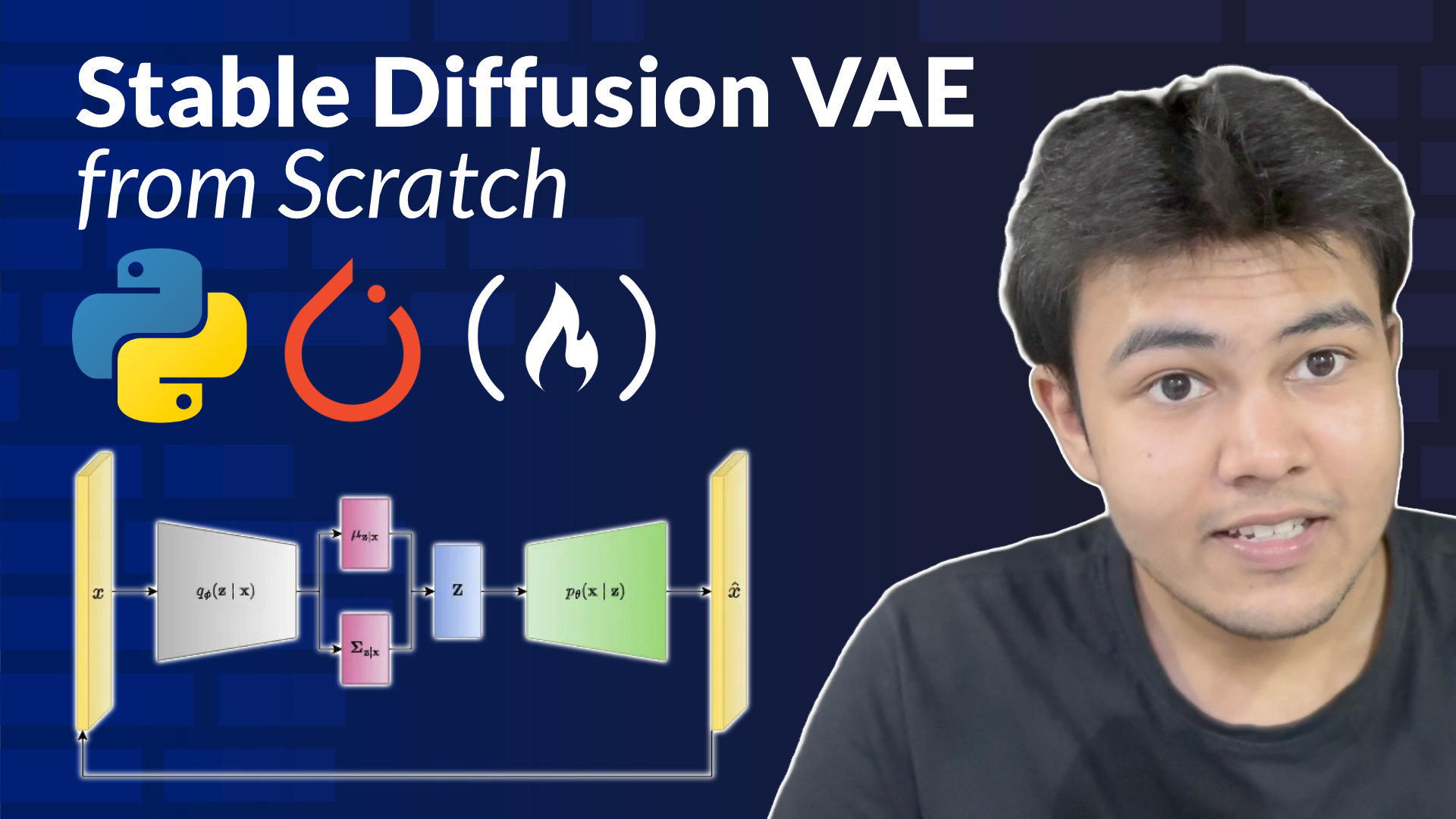

We just published a course on the freeCodeCamp.org YouTube channel that will teach you everything you need to know about Variational Autoencoders (VAEs). This course is perfect for anyone looking to dive deep into one of the fundamental concepts behind modern image generation techniques, such as those used in latent diffusion models and GANs. Harsh Bhatt developed this course. He is a machine learning engineer.

VAEs are a special type of autoencoder that work with probability distributions instead of fixed points in the latent space. This capability allows VAEs to learn and represent the variability in datasets, such as the different ways the digit "7" might appear in handwritten forms. By learning a mean (μ) and standard deviation (σ), the VAE effectively captures the distribution of the data, making it an essential tool for applications in generative modeling and unsupervised learning.

Why Learn Variational Autoencoders?

VAEs are more than just a stepping stone to understanding image generation. They solve key challenges in dimensionality reduction and data representation. Unlike traditional autoencoders, which focus on compressing data into a fixed latent representation, VAEs leverage probabilistic methods to create smoother and more meaningful latent spaces. This makes them particularly useful for tasks like:

- Image synthesis: Generating realistic and diverse images.

- Data augmentation: Creating new data samples for training.

- Anomaly detection: Identifying outliers in data distributions.

What You'll Learn in This Course

This comprehensive course begins by introducing the basic concepts of autoencoders, including the encoder-decoder architecture. You'll then delve into the differences between standard autoencoders and VAEs, learning why encoding data into probability distributions is a game changer. Key topics covered include:

- Latent space representation: How VAEs group similar data points into clusters within the latent space.

- The reparameterization trick: Enabling gradient-based optimization by representing random variables in a differentiable way.

- Loss functions for VAEs: Combining reconstruction loss and KL divergence to optimize the model.

- Implementation with PyTorch: Hands-on coding to build and train your own VAE from scratch.

Hands-On Implementation

The course takes you step by step through implementing a VAE using PyTorch, starting with the encoder and decoder architecture. You’ll learn how to:

- Encode images into a latent representation.

- Decode the latent vectors to reconstruct the original images.

- Optimize the model using reconstruction loss and KL divergence.

- Visualize and interpret the latent space.

You’ll also gain insights into advanced techniques like self-attention layers for encoding context and residual blocks for efficient neural network training.

Conclusion

Ready to start your journey into generative modeling? Watch the course now on freeCodeCamp.org's YouTube channel and get hands-on with Variational Autoencoders!