Chapter 7: The Future of AI Agents and LLMs

Chapter 7: The Future of AI Agents and LLMs 관련

Convergence of LLMs with Reinforcement Learning

As you explore the future of AI agents and Large Language Models (LLMs), the convergence of LLMs with reinforcement learning stands out as a particularly transformative development. This integration pushes the boundaries of traditional AI by enabling systems to not only generate and understand language but also to learn from their interactions in real-time.

Through reinforcement learning, AI agents can adaptively modify their strategies based on feedback from their environment, resulting in a continuous refinement of their decision-making processes. This means that, unlike static models, AI systems enhanced with reinforcement learning can handle increasingly complex and dynamic tasks with minimal human oversight.

The implications for such systems are profound: in applications ranging from autonomous robotics to personalized education, AI agents could autonomously improve their performance over time, making them more efficient and responsive to the evolving demands of their operational contexts.

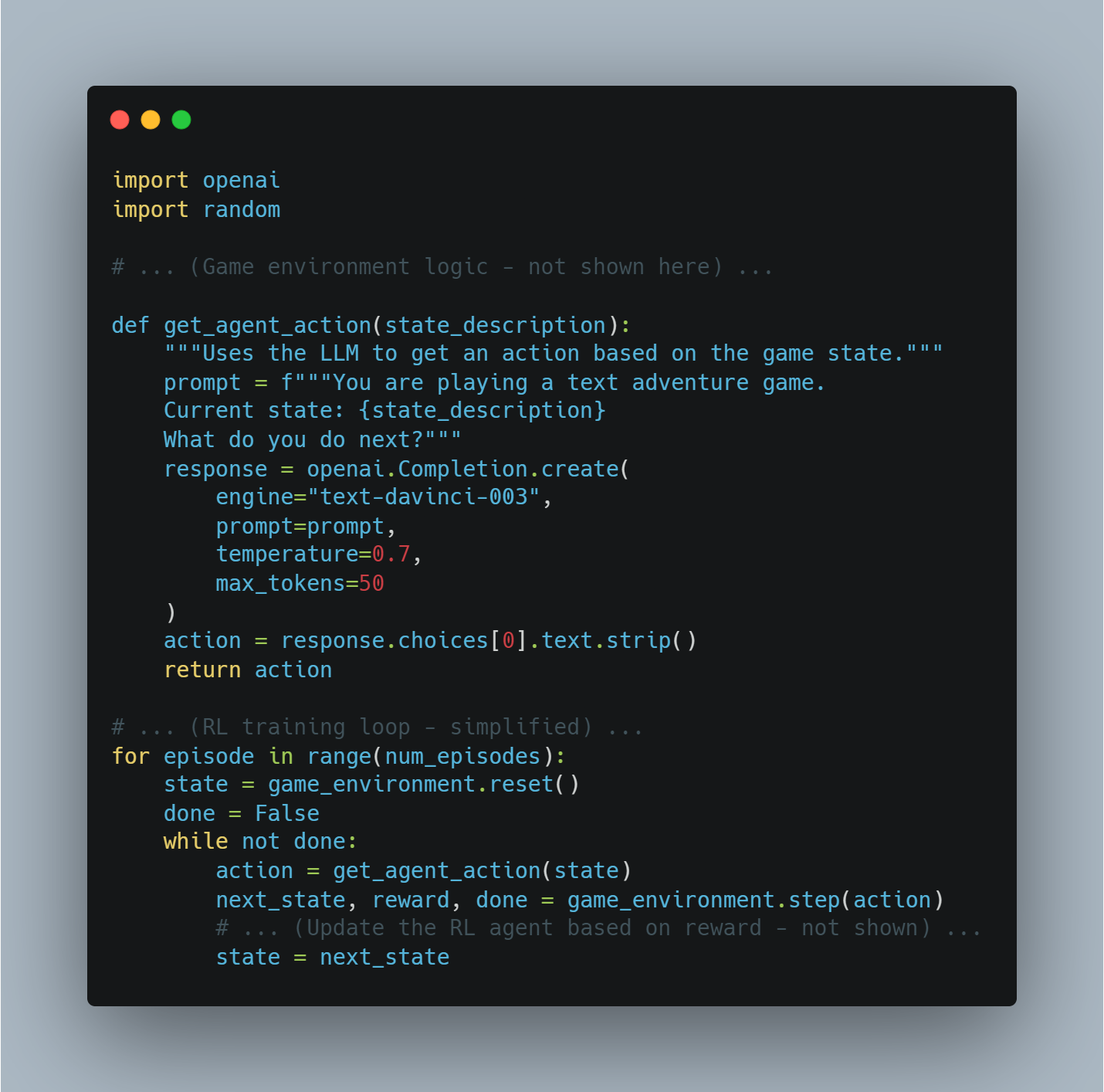

Example: Text-Based Game Playing

Imagine an AI agent playing a text-based adventure game.

- Environment: The game itself (rules, state descriptions, and so on)

- LLM: Processes the game's text, understands the current situation, and generates possible actions (for example, "go north", "take sword").

- Reward: Given by the game based on the outcome of the action (for example, positive reward for finding treasure, negative for losing health).

Code Example (Conceptual using Python and OpenAI's API)

import openai

import random

# ... (Game environment logic - not shown here) ...

def get_agent_action(state_description):

"""Uses the LLM to get an action based on the game state."""

prompt = f"""You are playing a text adventure game.

Current state: {state_description}

What do you do next?"""

response = openai.Completion.create(

engine="text-davinci-003",

prompt=prompt,

temperature=0.7,

max_tokens=50

)

action = response.choices[0].text.strip()

return action

# ... (RL training loop - simplified) ...

for episode in range(num_episodes):

state = game_environment.reset()

done = False

while not done:

action = get_agent_action(state)

next_state, reward, done = game_environment.step(action)

# ... (Update the RL agent based on reward - not shown) ...

state = next_state

openai and random libraries. It defines a function get_agent_action that uses the OpenAI GPT model (text-davinci-003) to generate an action for a text-based adventure game based on the current state. The script also includes a simplified reinforcement learning (RL) training loop where the agent interacts with the game environment to learn optimal actions.Multimodal AI Integration

The integration of multimodal AI is another critical trend shaping the future of AI agents. By enabling systems to process and combine data from various sources—such as text, images, audio, and sensory inputs—multimodal AI offers a more comprehensive understanding of the environments in which these systems operate.

For instance, in autonomous vehicles, the ability to synthesize visual data from cameras, contextual data from maps, and real-time traffic updates allows the AI to make more informed and safer driving decisions.

This capability extends to other domains like healthcare, where an AI agent could integrate patient data from medical records, diagnostic imaging, and genomic information to deliver more accurate and personalized treatment recommendations.

The challenge here lies in the seamless integration and real-time processing of diverse data streams, which requires advances in model architecture and data fusion techniques.

Successfully overcoming these challenges will be pivotal in deploying AI systems that are truly intelligent and capable of functioning in complex, real-world environments.

Multimodal AI example 1: Image Captioning for Visual Question Answering

- Goal: An AI agent that can answer questions about images.

- Modalities: Image, Text

- Process:

- Image Feature Extraction: Use a pre-trained Convolutional Neural Network (CNN) to extract features from the image.

- Caption Generation: Use an LLM (like a Transformer model) to generate a caption describing the image based on the extracted features.

- Question Answering: Use another LLM to process both the question and the generated caption to provide an answer.

Code Example (Conceptual using Python and Hugging Face Transformers):

from transformers import ViTFeatureExtractor, VisionEncoderDecoderModel, AutoTokenizer, AutoModelForQuestionAnswering

from PIL import Image

import requests

# Load pre-trained models

image_model_name = "nlpconnect/vit-gpt2-image-captioning"

feature_extractor = ViTFeatureExtractor.from_pretrained(image_model_name)

image_caption_model = VisionEncoderDecoderModel.from_pretrained(image_model_name)

qa_model_name = "distilbert-base-cased-distilled-squad"

qa_tokenizer = AutoTokenizer.from_pretrained(qa_model_name)

qa_model = AutoModelForQuestionAnswering.from_pretrained(qa_model_name)

# Function to generate image caption

def generate_caption(image_url):

image = Image.open(requests.get(image_url, stream=True).raw)

pixel_values = feature_extractor(images=image, return_tensors="pt").pixel_values

generated_caption = image_caption_model.generate(pixel_values, max_length=50, num_beams=4, early_stopping=True)

caption = tokenizer.decode(generated_caption[0], skip_special_tokens=True)

return caption

# Function to answer questions about the image

def answer_question(question, caption):

inputs = qa_tokenizer(question, caption, add_special_tokens=True, return_tensors="pt")

input_ids = inputs["input_ids"].tolist()[0]

outputs = qa_model(**inputs)

answer_start_scores = outputs.start_logits

answer_end_scores = outputs.end_logits

answer_start = torch.argmax(answer_start_scores)

answer_end = torch.argmax(answer_end_scores) + 1

answer = qa_tokenizer.convert_tokens_to_string(qa_tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end]))

return answer

# Example usage

image_url = "https://example.com/image.jpg"

caption = generate_caption(image_url)

question = "What is in the image?"

answer = answer_question(question, caption)

print(f"Caption: {caption}")

print(f"Answer: {answer}")

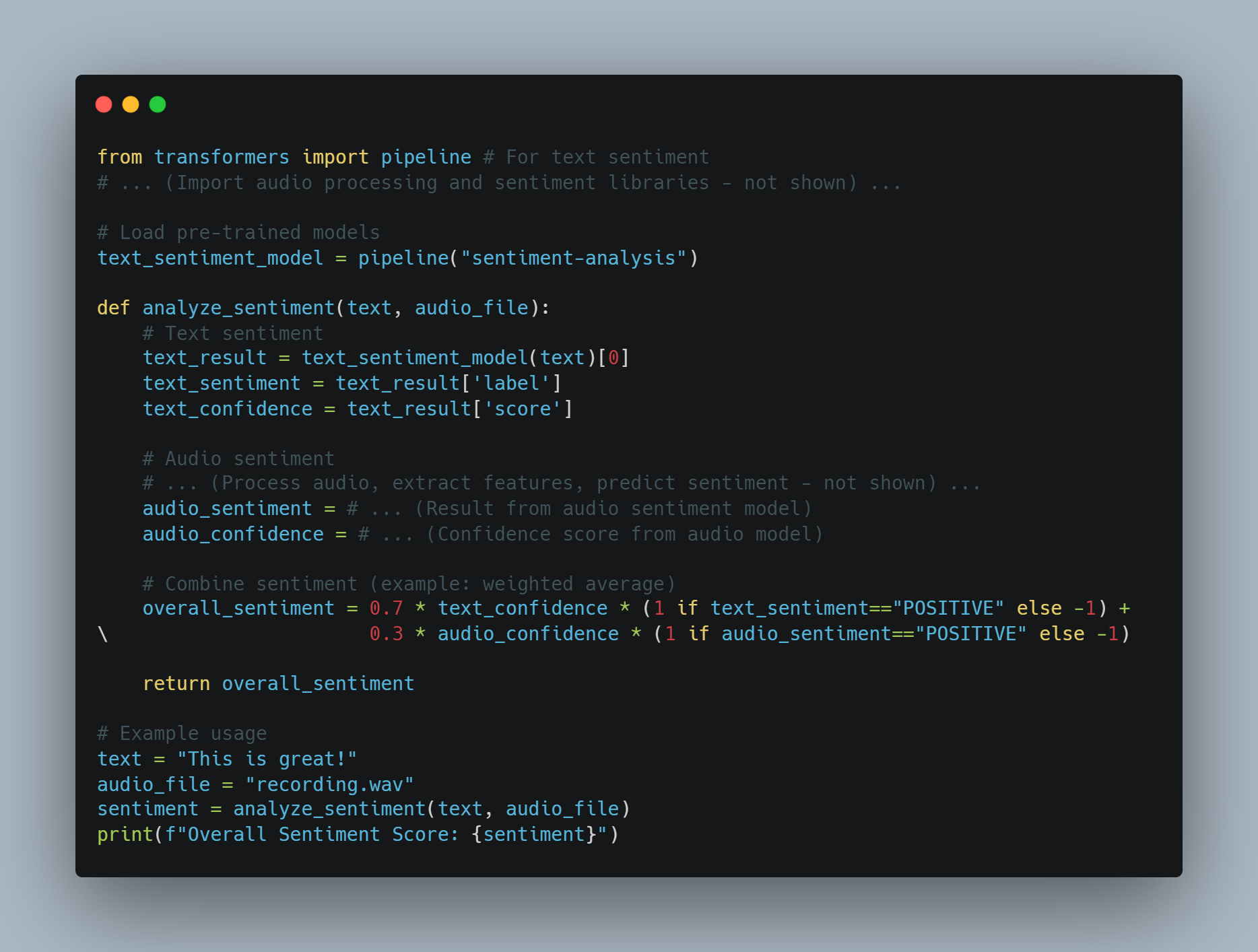

Multimodal AI example 2: Sentiment Analysis from Text and Audio

- Goal: An AI agent that analyzes sentiment from both the text and tone of a message.

- Modalities: Text, Audio

- Process:

- Text Sentiment: Use a pre-trained sentiment analysis model on the text.

- Audio Sentiment: Use an audio processing model to extract features like tone and pitch, then use these features to predict sentiment.

- Fusion: Combine the text and audio sentiment scores (for example, weighted average) to get the overall sentiment.

Code Example (Conceptual using Python):

from transformers import pipeline # For text sentiment

# ... (Import audio processing and sentiment libraries - not shown) ...

# Load pre-trained models

text_sentiment_model = pipeline("sentiment-analysis")

def analyze_sentiment(text, audio_file):

# Text sentiment

text_result = text_sentiment_model(text)[0]

text_sentiment = text_result['label']

text_confidence = text_result['score']

# Audio sentiment

# ... (Process audio, extract features, predict sentiment - not shown) ...

audio_sentiment = # ... (Result from audio sentiment model)

audio_confidence = # ... (Confidence score from audio model)

# Combine sentiment (example: weighted average)

overall_sentiment = 0.7 * text_confidence * (1 if text_sentiment=="POSITIVE" else -1) + \

0.3 * audio_confidence * (1 if audio_sentiment=="POSITIVE" else -1)

return overall_sentiment

# Example usage

text = "This is great!"

audio_file = "recording.wav"

sentiment = analyze_sentiment(text, audio_file)

print(f"Overall Sentiment Score: {sentiment}")

analyze_sentiment which combines text and audio sentiment results. The code includes an example usage with a text input 'This is great!' and an audio file named 'recording.wav', and prints the overall sentiment score.Challenges and Considerations:

- Data Alignment: Ensuring that data from different modalities is synchronized and aligned is crucial.

- Model Complexity: Multimodal models can be complex to train and require large, diverse datasets.

- Fusion Techniques: Choosing the right method to combine information from different modalities is important and problem-specific.

Multimodal AI is a rapidly evolving field with the potential to revolutionize how AI agents perceive and interact with the world.

Distributed AI Systems and Edge Computing

Looking towards the evolution of AI infrastructures, the shift towards distributed AI systems, supported by edge computing, represents a significant advancement.

Distributed AI systems decentralize computational tasks by processing data closer to the source—such as IoT devices or local servers—rather than relying on centralized cloud resources. This approach not only reduces latency, which is crucial for time-sensitive applications like autonomous drones or industrial automation, but also enhances data privacy and security by keeping sensitive information local.

Also, distributed AI systems improve scalability, allowing for the deployment of AI across vast networks, such as smart cities, without overwhelming centralized data centers.

The technical challenges associated with distributed AI include ensuring consistency and coordination across distributed nodes, as well as optimizing resource allocation to maintain performance across diverse and potentially resource-constrained environments.

As you develop and deploy AI systems, embracing distributed architectures will be key to creating resilient, efficient, and scalable AI solutions that meet the demands of future applications.

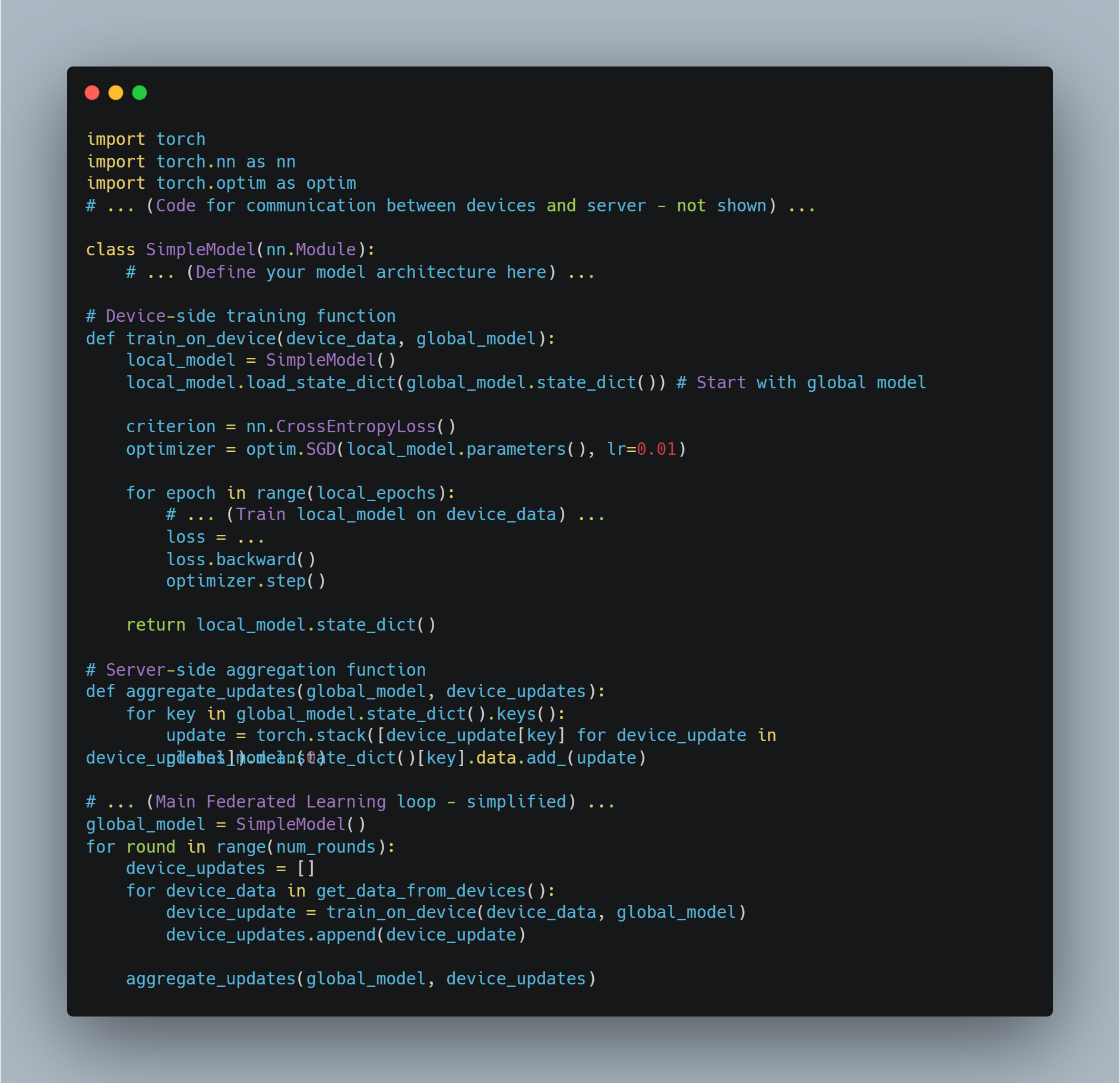

Distributed AI Systems and Edge Computing example 1: Federated Learning for Privacy-Preserving Model Training

- Goal: Train a shared model across multiple devices (for example, smartphones) without directly sharing sensitive user data.

- Approach:

- Local Training: Each device trains a local model on its own data.

- Parameter Aggregation: Devices send model updates (gradients or parameters) to a central server.

- Global Model Update: The server aggregates the updates, improves the global model, and sends the updated model back to the devices.

Code Example (Conceptual using Python and PyTorch):

import torch

import torch.nn as nn

import torch.optim as optim

# ... (Code for communication between devices and server - not shown) ...

class SimpleModel(nn.Module):

# ... (Define your model architecture here) ...

# Device-side training function

def train_on_device(device_data, global_model):

local_model = SimpleModel()

local_model.load_state_dict(global_model.state_dict()) # Start with global model

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(local_model.parameters(), lr=0.01)

for epoch in range(local_epochs):

# ... (Train local_model on device_data) ...

loss = ...

loss.backward()

optimizer.step()

return local_model.state_dict()

# Server-side aggregation function

def aggregate_updates(global_model, device_updates):

for key in global_model.state_dict().keys():

update = torch.stack([device_update[key] for device_update in device_updates]).mean(0)

global_model.state_dict()[key].data.add_(update)

# ... (Main Federated Learning loop - simplified) ...

global_model = SimpleModel()

for round in range(num_rounds):

device_updates = []

for device_data in get_data_from_devices():

device_update = train_on_device(device_data, global_model)

device_updates.append(device_update)

aggregate_updates(global_model, device_updates)

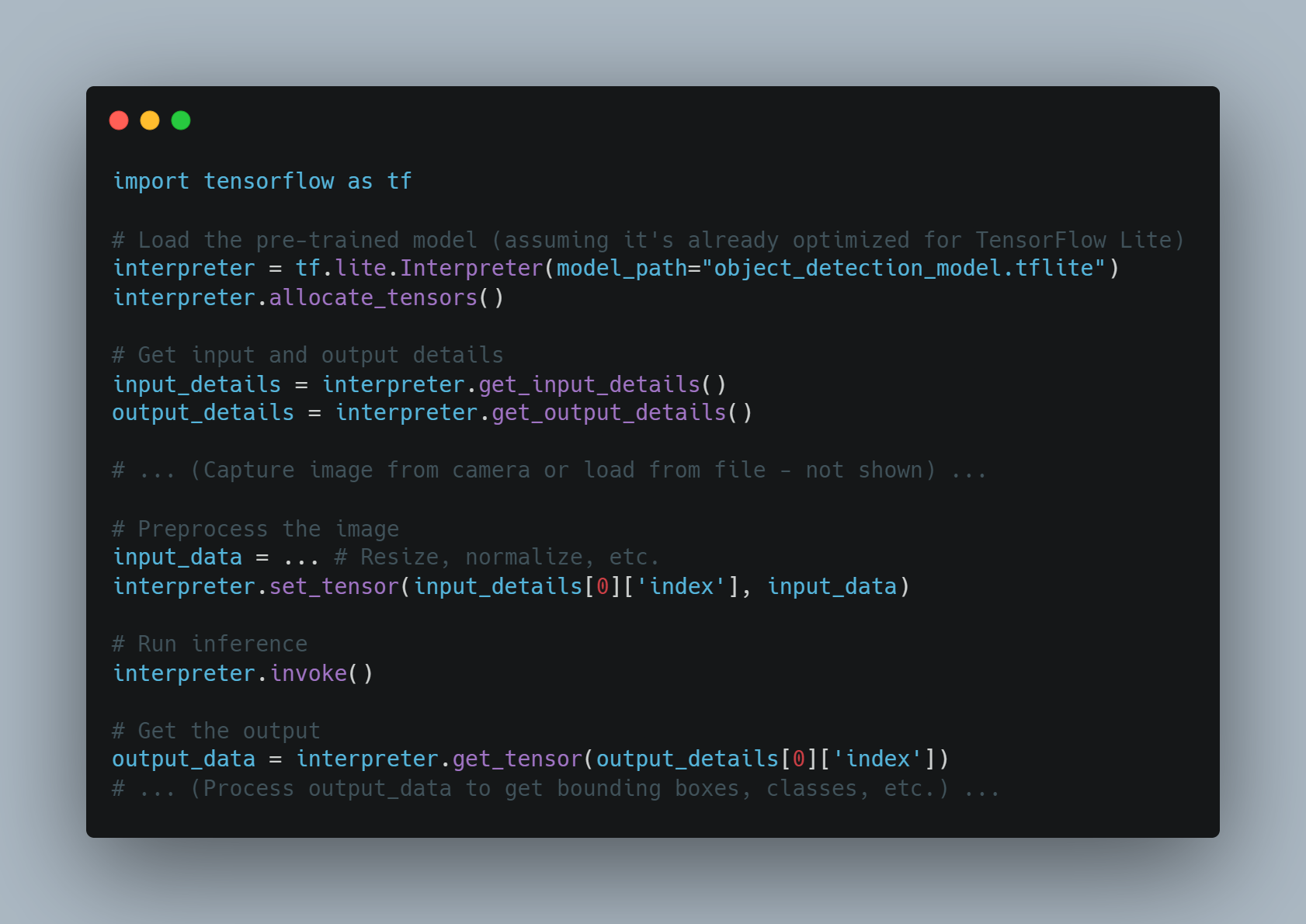

Example 2: Real-Time Object Detection on Edge Devices

- Goal: Deploy an object detection model on a resource-constrained device (for example, Raspberry Pi) for real-time inference.

- Approach:

- Model Optimization: Use techniques like model quantization or pruning to reduce the model size and computational requirements.

- Edge Deployment: Deploy the optimized model to the edge device.

- Local Inference: The device performs object detection locally, reducing latency and reliance on cloud communication.

Code Example (Conceptual using Python and TensorFlow Lite):

import tensorflow as tf

# Load the pre-trained model (assuming it's already optimized for TensorFlow Lite)

interpreter = tf.lite.Interpreter(model_path="object_detection_model.tflite")

interpreter.allocate_tensors()

# Get input and output details

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

# ... (Capture image from camera or load from file - not shown) ...

# Preprocess the image

input_data = ... # Resize, normalize, etc.

interpreter.set_tensor(input_details[0]['index'], input_data)

# Run inference

interpreter.invoke()

# Get the output

output_data = interpreter.get_tensor(output_details[0]['index'])

# ... (Process output_data to get bounding boxes, classes, etc.) ...

Challenges and Considerations:

- Communication Overhead: Efficiently coordinating and communicating between distributed nodes is crucial.

- Resource Management: Optimizing resource allocation (CPU, memory, bandwidth) across devices is important.

- Security: Securing distributed systems and protecting data privacy are paramount concerns.

Distributed AI and edge computing are essential for building scalable, efficient, and privacy-aware AI systems, especially as we move towards a future with billions of interconnected devices.

Advancements in Natural Language Processing

Natural Language Processing (NLP) continues to be at the forefront of AI advancements, driving significant improvements in how machines understand, generate, and interact with human language.

Recent developments in NLP, such as the evolution of transformers and attention mechanisms, have drastically enhanced the ability of AI to process complex linguistic structures, making interactions more natural and contextually aware.

This progress has enabled AI systems to understand nuances, sentiments, and even cultural references within text, leading to more accurate and meaningful communication.

For instance, in customer service, advanced NLP models can not only handle queries with precision but also detect emotional cues from customers, enabling more empathetic and effective responses.

Looking ahead, the integration of multilingual capabilities and deeper semantic understanding in NLP models will further expand their applicability, allowing for seamless communication across different languages and dialects, and even enabling AI systems to serve as real-time translators in diverse global contexts.

Natural Language Processing (NLP) is rapidly evolving, with breakthroughs in areas like transformer models and attention mechanisms. Here are some examples and code snippets to illustrate these advancements:

NLP example 1: Sentiment Analysis with Fine-tuned Transformers

- Goal: Analyze the sentiment of text with high accuracy, capturing nuances and context.

- Approach: Fine-tune a pre-trained transformer model (like BERT) on a sentiment analysis dataset.

Code Example (using Python and Hugging Face Transformers):

from transformers import AutoModelForSequenceClassification, Trainer, TrainingArguments

from datasets import load_dataset

# Load pre-trained model and dataset

model_name = "bert-base-uncased"

model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=3) # 3 labels: Positive, Negative, Neutral

dataset = load_dataset("imdb", split="train[:10%]")

# Define training arguments

training_args = TrainingArguments(

output_dir="./results",

num_train_epochs=3,

per_device_train_batch_size=8,

)

# Fine-tune the model

trainer = Trainer(model=model, args=training_args, train_dataset=dataset)

trainer.train()

# Save the fine-tuned model

model.save_pretrained("./fine_tuned_sentiment_model")

# Load the fine-tuned model for inference

from transformers import pipeline

sentiment_classifier = pipeline("sentiment-analysis", model="./fine_tuned_sentiment_model")

# Example usage

text = "This movie was absolutely amazing! I loved the plot and the characters."

result = sentiment_classifier(text)[0]

print(f"Sentiment: {result['label']}, Confidence: {result['score']:.4f}")

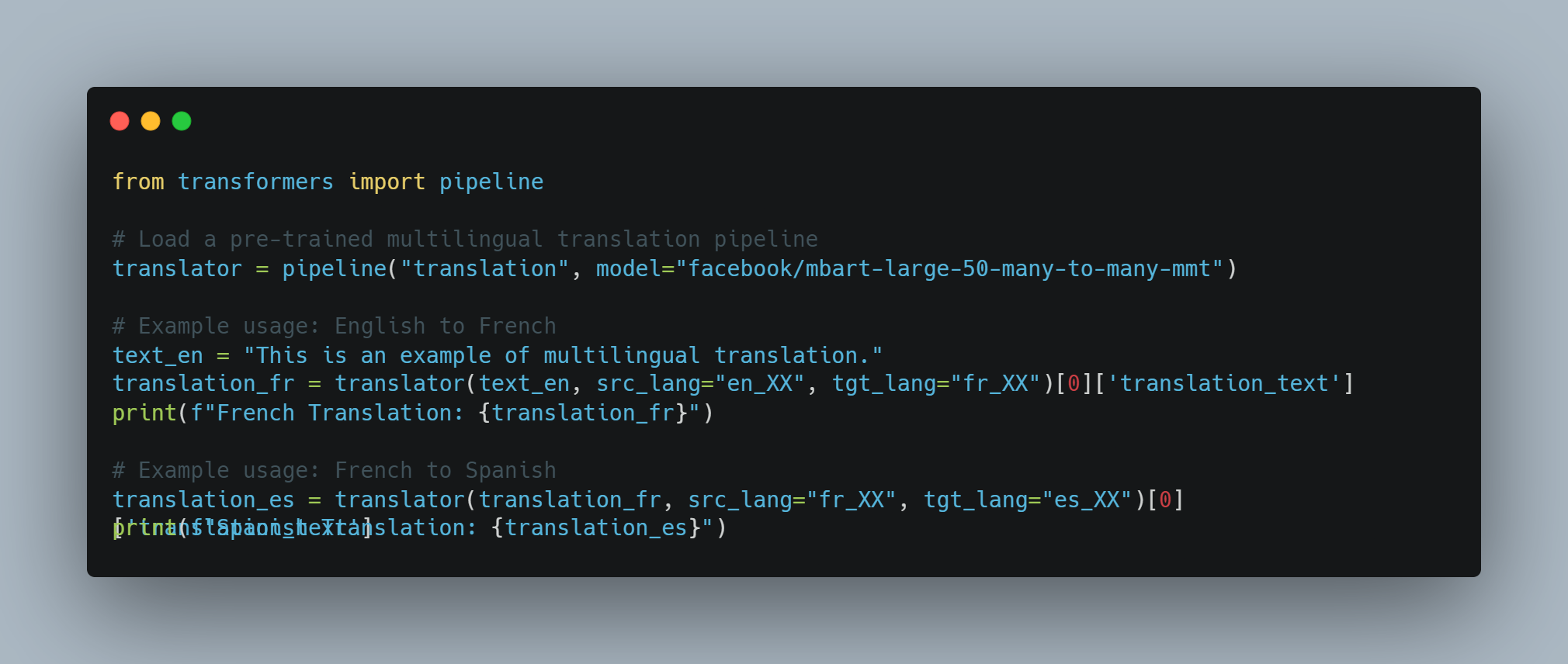

NLP Example 2: Multilingual Machine Translation with a Single Model

- Goal: Translate between multiple languages using a single model, leveraging shared linguistic representations.

- Approach: Use a large, multilingual transformer model (like mBART or XLM-R) that has been trained on a massive dataset of parallel text in multiple languages.

Code Example (using Python and Hugging Face Transformers):

from transformers import pipeline

# Load a pre-trained multilingual translation pipeline

translator = pipeline("translation", model="facebook/mbart-large-50-many-to-many-mmt")

# Example usage: English to French

text_en = "This is an example of multilingual translation."

translation_fr = translator(text_en, src_lang="en_XX", tgt_lang="fr_XX")[0]['translation_text']

print(f"French Translation: {translation_fr}")

# Example usage: French to Spanish

translation_es = translator(translation_fr, src_lang="fr_XX", tgt_lang="es_XX")[0]['translation_text']

print(f"Spanish Translation: {translation_es}")

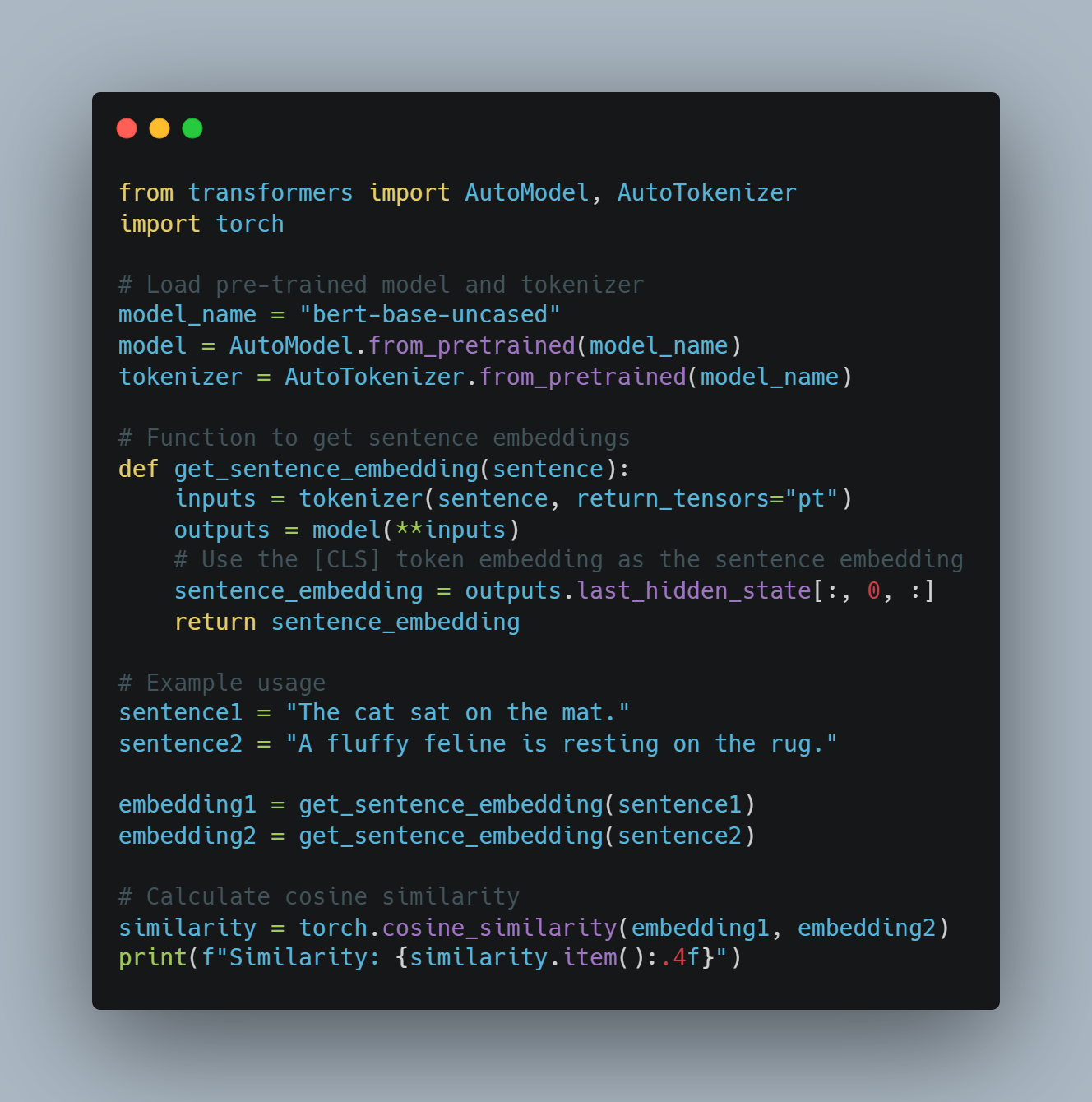

transformers library for multilingual translation. The code loads a pre-trained multilingual translation pipeline from Facebook's mBART model and shows examples of translating text from English to French and then from French to Spanish.NLP Example 3: Contextual Word Embeddings for Semantic Similarity

- Goal: Determine the similarity between words or sentences, taking context into account.

- Approach: Use a transformer model (like BERT) to generate contextual word embeddings, which capture the meaning of words within a specific sentence.

Code Example (using Python and Hugging Face Transformers):

from transformers import AutoModel, AutoTokenizer

import torch

# Load pre-trained model and tokenizer

model_name = "bert-base-uncased"

model = AutoModel.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Function to get sentence embeddings

def get_sentence_embedding(sentence):

inputs = tokenizer(sentence, return_tensors="pt")

outputs = model(**inputs)

# Use the [CLS] token embedding as the sentence embedding

sentence_embedding = outputs.last_hidden_state[:, 0, :]

return sentence_embedding

# Example usage

sentence1 = "The cat sat on the mat."

sentence2 = "A fluffy feline is resting on the rug."

embedding1 = get_sentence_embedding(sentence1)

embedding2 = get_sentence_embedding(sentence2)

# Calculate cosine similarity

similarity = torch.cosine_similarity(embedding1, embedding2)

print(f"Similarity: {similarity.item():.4f}")

Challenges and Future Directions:

- Bias and Fairness: NLP models can inherit biases from their training data, leading to unfair or discriminatory outcomes. Addressing bias is crucial.

- Common Sense Reasoning: LLMs still struggle with common sense reasoning and understanding implicit information.

- Explainability: The decision-making process of complex NLP models can be opaque, making it difficult to understand why they generate certain outputs.

Despite these challenges, NLP is rapidly advancing. The integration of multimodal information, improved common sense reasoning, and enhanced explainability are key areas of ongoing research that will further revolutionize how AI interacts with human language.

Personalized AI Assistants

The future of personalized AI assistants is poised to become increasingly sophisticated, moving beyond basic task management to truly intuitive, proactive support tailored to individual needs.

These assistants will leverage advanced machine learning algorithms to continuously learn from your behaviors, preferences, and routines, offering increasingly personalized recommendations and automating more complex tasks.

For example, a personalized AI assistant could manage not only your schedule but also anticipate your needs by suggesting relevant resources or adjusting your environment based on your mood or past preferences.

As AI assistants become more integrated into daily life, their ability to adapt to changing contexts and provide seamless, cross-platform support will become a key differentiator. The challenge lies in balancing personalization with privacy, requiring robust data protection mechanisms to ensure that sensitive information is managed securely while delivering a deeply personalized experience.

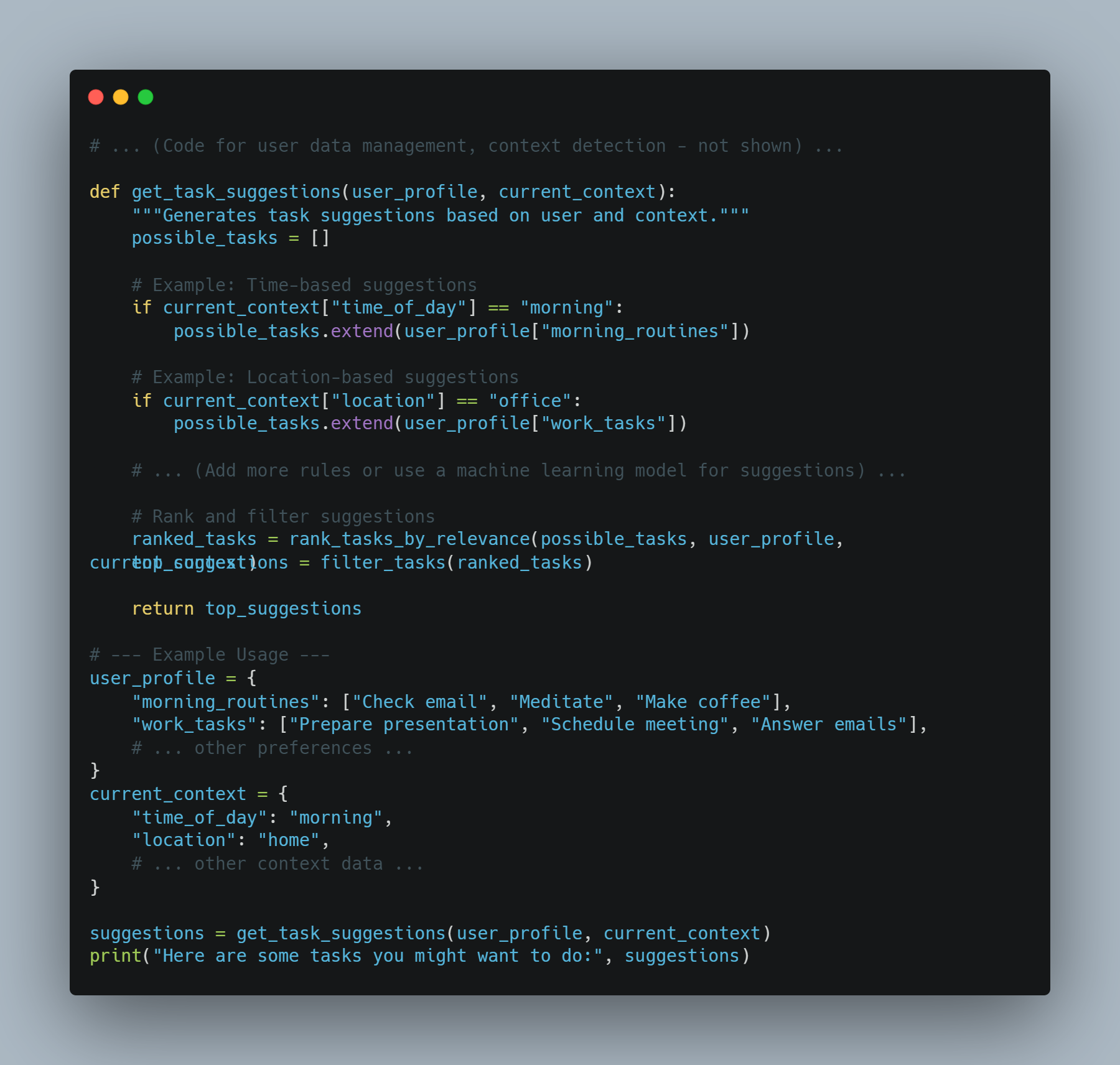

AI Assistants example 1: Context-Aware Task Suggestion

- Goal: An assistant that suggests tasks based on the user's current context (location, time, past behavior).

- Approach: Combine user data, contextual signals, and a task recommendation model.

Code Example (Conceptual using Python):

# ... (Code for user data management, context detection - not shown) ...

def get_task_suggestions(user_profile, current_context):

"""Generates task suggestions based on user and context."""

possible_tasks = []

# Example: Time-based suggestions

if current_context["time_of_day"] == "morning":

possible_tasks.extend(user_profile["morning_routines"])

# Example: Location-based suggestions

if current_context["location"] == "office":

possible_tasks.extend(user_profile["work_tasks"])

# ... (Add more rules or use a machine learning model for suggestions) ...

# Rank and filter suggestions

ranked_tasks = rank_tasks_by_relevance(possible_tasks, user_profile, current_context)

top_suggestions = filter_tasks(ranked_tasks)

return top_suggestions

# --- Example Usage ---

user_profile = {

"morning_routines": ["Check email", "Meditate", "Make coffee"],

"work_tasks": ["Prepare presentation", "Schedule meeting", "Answer emails"],

# ... other preferences ...

}

current_context = {

"time_of_day": "morning",

"location": "home",

# ... other context data ...

}

suggestions = get_task_suggestions(user_profile, current_context)

print("Here are some tasks you might want to do:", suggestions)

get_task_suggestions. The function generates task suggestions based on user profile and current context, such as time of day or location. Example user profiles and contexts are defined, and the function is called to produce task suggestions which are then printed.AI Assistants example 2: Proactive Information Delivery

- Goal: An assistant that proactively provides relevant information based on user's schedule and preferences.

- Approach: Integrate calendar data, user interests, and a content retrieval system.

Code Example (Conceptual using Python):

# ... (Code for calendar access, user interest profile - not shown) ...

def get_relevant_info(user_profile, calendar_events):

"""Retrieves information relevant to upcoming events."""

relevant_info = []

for event in calendar_events:

if "meeting" in event["title"].lower():

# ... (Retrieve company info, participant profiles, etc.) ...

relevant_info.append(f"Meeting '{event['title']}': {meeting_info}")

elif "travel" in event["title"].lower():

# ... (Retrieve flight status, destination info, etc.) ...

relevant_info.append(f"Trip '{event['title']}': {travel_info}")

return relevant_info

# --- Example Usage ---

calendar_events = [

{"title": "Team Meeting", "time": "10:00 AM"},

{"title": "Flight to New York", "time": "6:00 PM"}

]

user_profile = {

"interests": ["technology", "travel", "business"]

# ... other preferences ...

}

info = get_relevant_info(user_profile, calendar_events)

for item in info:

print(item)

get_relevant_info function, sample calendar_events and user_profile dictionaries, and a demonstration of function usage with printing the results.AI Assistants example 3: Personalized Content Recommendation

- Goal: An assistant that recommends content (articles, videos, music) tailored to user preferences.

- Approach: Use collaborative filtering or content-based recommendation systems.

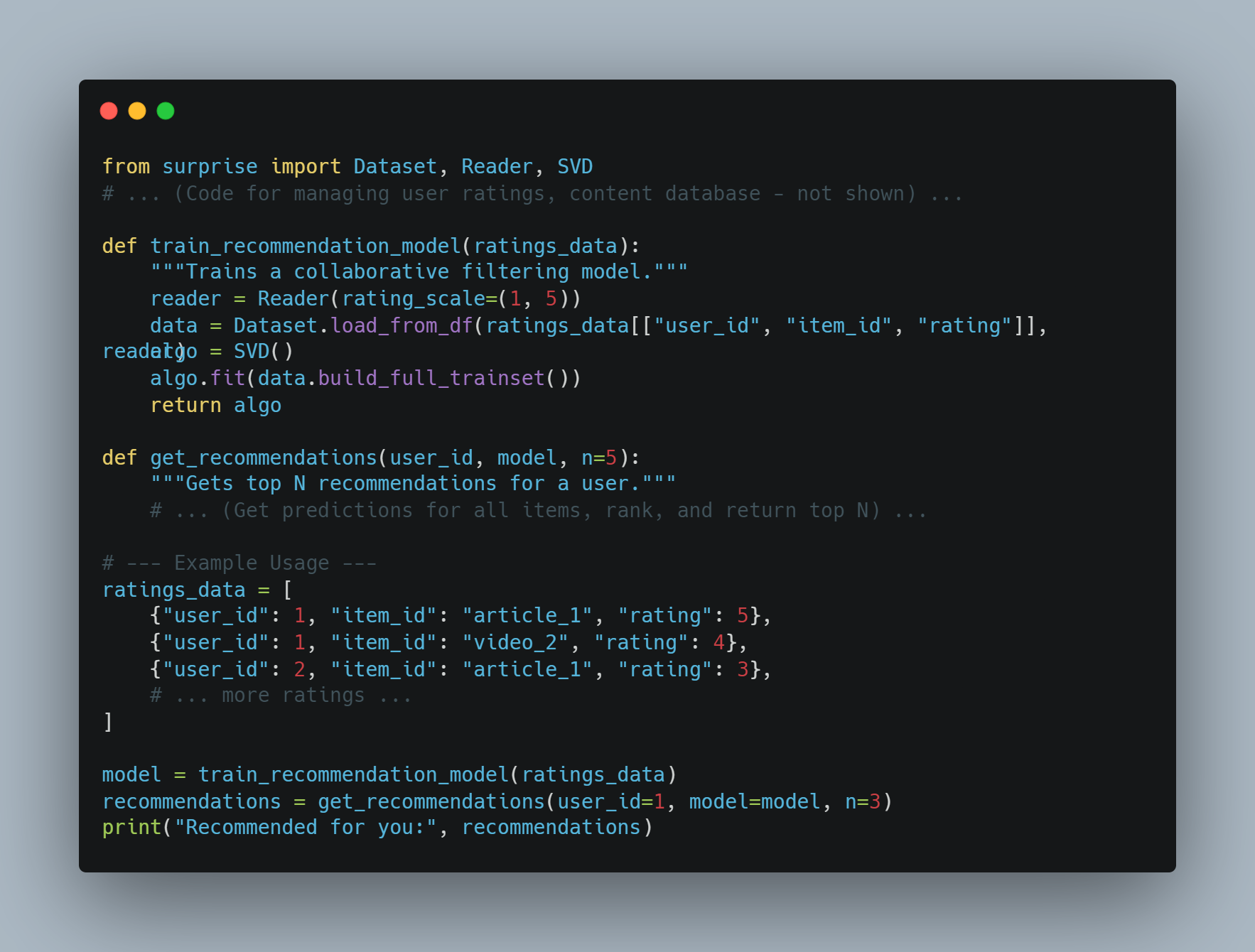

Code Example (Conceptual using Python and a library like Surprise):

from surprise import Dataset, Reader, SVD

# ... (Code for managing user ratings, content database - not shown) ...

def train_recommendation_model(ratings_data):

"""Trains a collaborative filtering model."""

reader = Reader(rating_scale=(1, 5))

data = Dataset.load_from_df(ratings_data[["user_id", "item_id", "rating"]], reader)

algo = SVD()

algo.fit(data.build_full_trainset())

return algo

def get_recommendations(user_id, model, n=5):

"""Gets top N recommendations for a user."""

# ... (Get predictions for all items, rank, and return top N) ...

# --- Example Usage ---

ratings_data = [

{"user_id": 1, "item_id": "article_1", "rating": 5},

{"user_id": 1, "item_id": "video_2", "rating": 4},

{"user_id": 2, "item_id": "article_1", "rating": 3},

# ... more ratings ...

]

model = train_recommendation_model(ratings_data)

recommendations = get_recommendations(user_id=1, model=model, n=3)

print("Recommended for you:", recommendations)

train_recommendation_model) using user ratings data, and another to get recommendations (get_recommendations). An example usage illustrates how to train the model with sample ratings_data and retrieve recommendations for a user with ID 1.Challenges and Ethical Considerations:

- Data Privacy: Handling user data responsibly and transparently is crucial.

- Bias and Fairness: Personalization should not amplify existing biases.

- User Control: Users should have control over their data and personalization settings.

Building personalized AI assistants requires careful consideration of both technical and ethical aspects to create systems that are helpful, trustworthy, and respect user privacy.

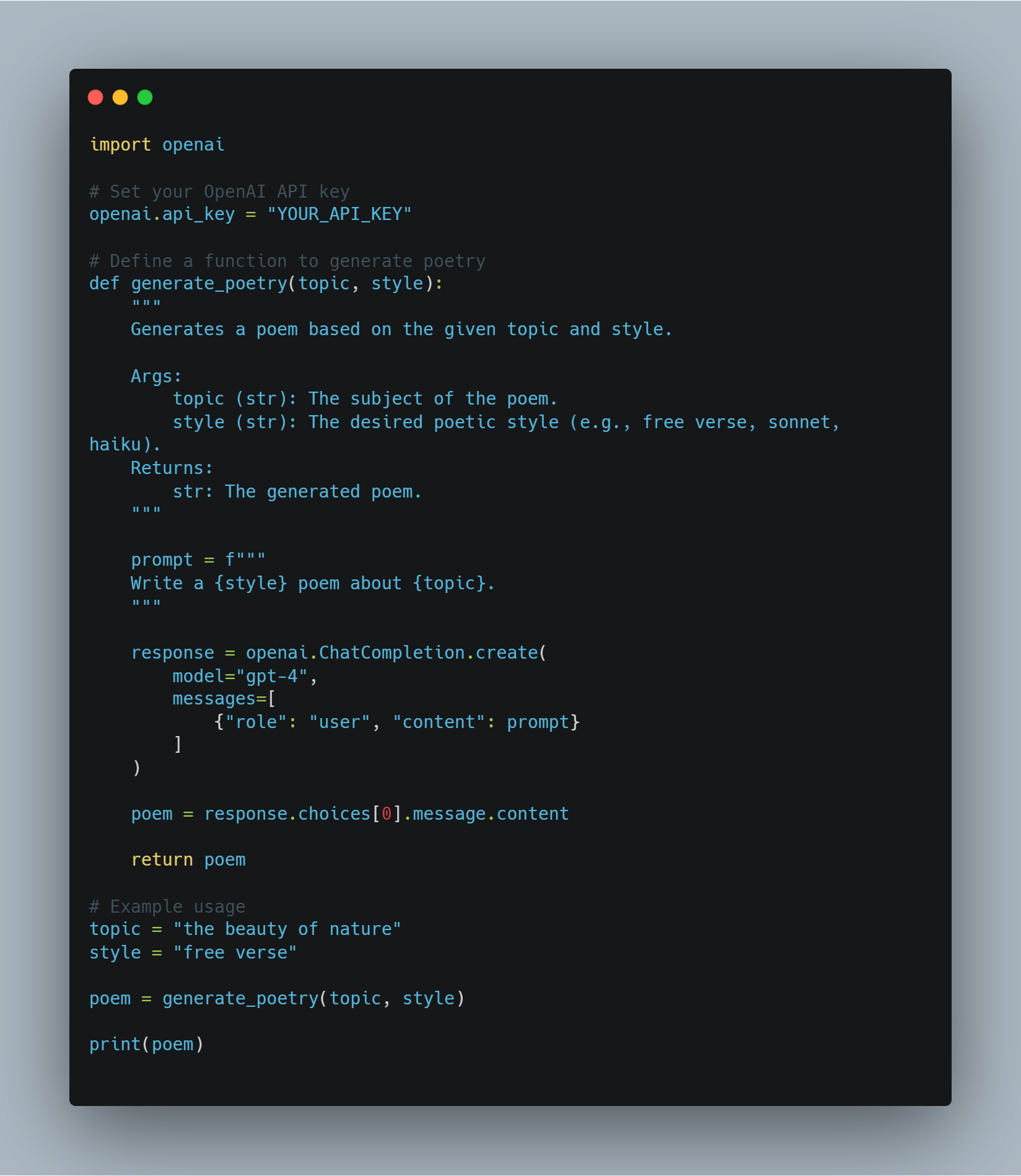

AI in Creative Industries

AI is making significant inroads into the creative industries, transforming how art, music, film, and literature are produced and consumed. With advancements in generative models, such as Generative Adversarial Networks (GANs) and transformer-based models, AI can now generate content that rivals human creativity.

For instance, AI can compose music that reflects specific genres or moods, create digital art that mimics the style of famous painters, or even draft narrative plots for films and novels.

In the advertising industry, AI is being used to generate personalized content that resonates with individual consumers, enhancing engagement and effectiveness.

But the rise of AI in creative fields also raises questions about authorship, originality, and the role of human creativity. As you engage with AI in these domains, it will be crucial to explore how AI can complement human creativity rather than replace it, fostering collaboration between humans and machines to produce innovative and impactful content.

Here's an example of how GPT-4 can be integrated into a Python project for creative tasks, specifically in the realm of writing. This code demonstrates how to leverage GPT-4's capabilities to generate creative text formats, like poetry.

import openai

# Set your OpenAI API key

openai.api_key = "YOUR_API_KEY"

# Define a function to generate poetry

def generate_poetry(topic, style):

"""

Generates a poem based on the given topic and style.

Args:

topic (str): The subject of the poem.

style (str): The desired poetic style (e.g., free verse, sonnet, haiku).

Returns:

str: The generated poem.

"""

prompt = f"""

Write a {style} poem about {topic}.

"""

response = openai.ChatCompletion.create(

model="gpt-4",

messages=[

{"role": "user", "content": prompt}

]

)

poem = response.choices[0].message.content

return poem

# Example usage

topic = "the beauty of nature"

style = "free verse"

poem = generate_poetry(topic, style)

print(poem)

generate_poetry that takes topic and style as arguments, a prompt formation, API response handling, and example usage with the topic 'the beauty of nature' and style 'free verse'.Let’s see what’s going on here:

- Import OpenAI library: The code first imports the

openailibrary to access the OpenAI API. - Set API key: Replace

"YOUR_API_KEY"with your actual OpenAI API key. - Define

generate_poetryfunction: This function takes the poem'stopicandstyleas input and uses OpenAI's ChatCompletion API to generate the poem. - Construct the prompt: The prompt combines the

topicandstyleinto a clear instruction for GPT-4. - Send prompt to GPT-4: The code uses

openai.ChatCompletion.createto send the prompt to GPT-4 and receive the generated poem as a response. - Return the poem: The generated poem is then extracted from the response and returned by the function.

- Example usage: The code demonstrates how to call the

generate_poetryfunction with a specific topic and style. The resulting poem is then printed to the console.

AI-Powered Virtual Worlds

The development of AI-powered virtual worlds represents a significant leap in immersive experiences, where AI agents can create, manage, and evolve virtual environments that are both interactive and responsive to user input.

These virtual worlds, driven by AI, can simulate complex ecosystems, social interactions, and dynamic narratives, offering users a deeply engaging and personalized experience.

For example, in the gaming industry, AI can be used to create non-playable characters (NPCs) that learn from player behavior, adapting their actions and strategies to provide a more challenging and realistic experience.

Beyond gaming, AI-powered virtual worlds have potential applications in education, where virtual classrooms can be tailored to the learning styles and progress of individual students, or in corporate training, where realistic simulations can prepare employees for various scenarios.

The future of these virtual environments will depend on advancements in AI's ability to generate and manage vast, complex digital ecosystems in real-time, as well as on ethical considerations around user data and the psychological impacts of highly immersive experiences.

import random

from typing import List, Dict, Tuple

class VirtualWorld:

"""

Represents a simple AI-powered virtual world with dynamic environments and agents.

"""

def __init__(self, environment_size: Tuple[int, int], agent_types: List[str],

agent_properties: Dict[str, Dict]):

"""

Initializes the virtual world with specified parameters.

Args:

environment_size (Tuple[int, int]): Dimensions of the world (width, height).

agent_types (List[str]): List of different agent types (e.g., "player", "npc", "animal").

agent_properties (Dict[str, Dict]): Dictionary mapping agent types to their properties,

including initial number, movement speed, and other attributes.

"""

self.environment = [[' ' for _ in range(environment_size[0])] for _ in range(environment_size[1])]

self.agents = []

self.agent_types = agent_types

self.agent_properties = agent_properties

# Initialize agents

for agent_type in agent_types:

for _ in range(agent_properties[agent_type]['initial_number']):

self.add_agent(agent_type)

def add_agent(self, agent_type: str):

"""

Adds a new agent of the specified type to the world.

Args:

agent_type (str): The type of agent to add.

"""

# Assign random position within the environment

x = random.randint(0, len(self.environment[0]) - 1)

y = random.randint(0, len(self.environment) - 1)

# Create and add the agent

agent = Agent(agent_type, (x, y), self.agent_properties[agent_type])

self.agents.append(agent)

def update(self):

"""

Updates the virtual world for a single time step.

This involves moving agents, handling interactions, and potentially modifying the environment.

"""

# Move agents (simplified movement for demonstration)

for agent in self.agents:

agent.move(self.environment)

# TODO: Implement more complex logic for interactions, environment changes, etc.

def display(self):

"""

Prints a simple representation of the virtual world.

"""

for row in self.environment:

print(''.join(row))

class Agent:

"""

Represents a single agent in the virtual world.

"""

def __init__(self, agent_type: str, position: Tuple[int, int], properties: Dict):

"""

Initializes an agent with its type, position, and properties.

Args:

agent_type (str): The type of the agent.

position (Tuple[int, int]): The agent's initial position in the world.

properties (Dict): A dictionary containing the agent's properties.

"""

self.agent_type = agent_type

self.position = position

self.properties = properties

def move(self, environment: List[List[str]]):

"""

Moves the agent within the environment based on its properties.

Args:

environment (List[List[str]]): The environment's grid representation.

"""

# Determine movement direction (random for this example)

direction = random.choice(['N', 'S', 'E', 'W'])

# Apply movement based on direction

if direction == 'N' and self.position[1] > 0:

self.position = (self.position[0], self.position[1] - 1)

elif direction == 'S' and self.position[1] < len(environment) - 1:

self.position = (self.position[0], self.position[1] + 1)

elif direction == 'E' and self.position[0] < len(environment[0]) - 1:

self.position = (self.position[0] + 1, self.position[1])

elif direction == 'W' and self.position[0] > 0:

self.position = (self.position[0] - 1, self.position[1])

# Update the environment to reflect the agent's new position

environment[self.position[1]][self.position[0]] = self.agent_type[0]

# Example Usage

if __name__ == "__main__":

# Define world parameters

environment_size = (10, 10)

agent_types = ["player", "npc", "animal"]

agent_properties = {

"player": {"initial_number": 1, "movement_speed": 2},

"npc": {"initial_number": 5, "movement_speed": 1},

"animal": {"initial_number": 10, "movement_speed": 0.5},

}

# Create the virtual world

world = VirtualWorld(environment_size, agent_types, agent_properties)

# Simulate the world for several steps

for _ in range(10):

world.update()

world.display()

print() # Add an empty line for better readability

Here’s what’s going on in this code:

1. VirtualWorld Class:

- Defines the core of the virtual world.

- Contains the environment grid, a list of agents, and agent-related information.

__init__(): Initializes the world with size, agent types, and properties.add_agent(): Adds a new agent of a specified type to the world.update(): Performs a single time step update of the world.- It currently just moves agents, but you can add complex logic for agent interactions, environment changes, etc.

display(): Prints a basic representation of the environment.

2. Agent Class:

- Represents an individual agent within the world.

__init__(): Initializes the agent with its type, position, and properties.move(): Handles agent movement, updating its position within the environment. This method currently provides a simple random movement, but can be expanded to include complex AI behaviors.

3. Example Usage:

- Sets up world parameters like size, agent types, and their properties.

- Creates a VirtualWorld object.

- Executes the

update()method multiple times to simulate the world's evolution. - Calls

display()after each update to visualize the changes.

4. Enhancements:

- More Complex Agent AI: Implement more sophisticated AI for agent behavior. You can use:

- Pathfinding Algorithms: Help agents navigate the environment efficiently.

- Decision Trees/Machine Learning: Enable agents to make more intelligent decisions based on their surroundings and goals.

- Reinforcement Learning: Teach agents to learn and adapt their behavior over time.

- Environment Interaction: Add more dynamic elements to the environment, like obstacles, resources, or points of interest.

- Agent-to-Agent Interaction: Implement interactions between agents, such as communication, combat, or cooperation.

- Visual Representation: Use libraries like Pygame or Tkinter to create a visual representation of the virtual world.

This example is a basic foundation for creating an AI-powered virtual world. The level of complexity and sophistication can be further expanded to match your specific needs and creative goals.

Neuromorphic Computing and AI

Neuromorphic computing, inspired by the structure and functioning of the human brain, is set to revolutionize AI by offering new ways to process information efficiently and in parallel.

Unlike traditional computing architectures, neuromorphic systems are designed to mimic the neural networks of the brain, enabling AI to perform tasks such as pattern recognition, sensory processing, and decision-making with greater speed and energy efficiency.

This technology holds immense promise for developing AI systems that are more adaptive, capable of learning from minimal data, and effective in real-time environments.

For instance, in robotics, neuromorphic chips could enable robots to process sensory inputs and make decisions with a level of efficiency and speed that current architectures cannot match.

The challenge moving forward will be to scale neuromorphic computing to handle the complexity of large-scale AI applications, integrating it with existing AI frameworks to fully leverage its potential.

AI Agents in Space Exploration

AI agents are increasingly playing a crucial role in space exploration, where they are tasked with navigating harsh environments, making real-time decisions, and conducting scientific experiments autonomously.

As missions venture further into deep space, the need for AI systems that can operate independently of Earth-based control becomes more pressing. Future AI agents will be designed to handle the unpredictability of space, such as unanticipated obstacles, changes in mission parameters, or the need for self-repair.

For instance, AI could be used to guide rovers on Mars to autonomously explore terrain, identify scientifically valuable sites, and even drill for samples with minimal input from mission control. These AI agents could also manage life-support systems on long-duration missions, optimize energy usage, and adapt to the psychological needs of astronauts by providing companionship and mental stimulation.

The integration of AI in space exploration not only enhances mission capabilities but also opens up new possibilities for human exploration of the cosmos, where AI will be an indispensable partner in the quest to understand our universe.